Data engineering is the backbone of modern data management. It entails creating, managing, and maintaining systems that gather, store, and analyze vast amounts of data. Imagine having all the data from various sources like social media, websites, and apps neatly organized and ready for analysis. That’s what data engineers do! They ensure that data is accurate, accessible, and usable for businesses to make informed decisions.

Let’s explore the world of data engineering!

What Is Data Engineering?

Data engineering is the discipline that designs and implements the processes for collecting, storing, transforming, and analyzing large volumes of raw data, whether structured, semi-structured, or unstructured (often referred to as Big Data), so that data science professionals can extract valuable insights. As depicted in The Data Science Hierarchy of Needs, data engineers lay the essential groundwork that allows data scientists to conduct their analyses.

Data engineering also involves ensuring data quality and accessibility. Data engineers must verify that data sets from various sources (e.g., data warehouses and cloud-based data stores) are complete and clean before data processing begins. They also ensure that data users (e.g., data scientists and business analysts) can easily access and query the prepared data with their preferred analytics tools.

Why Is Data Engineering Important?

Companies of all sizes face the challenge of sifting through vast amounts of disparate data to answer critical business questions. Data engineering streamlines this process, enabling data consumers like analysts, data scientists, and executives to inspect all available data reliably, quickly, and securely.

The complexity of data analysis stems from the use of different technologies and various data structures. However, analytical tools often assume uniformity in data management and structure, leading to difficulties evaluating business performance.

For instance, a brand collects diverse data about its customers:

- One system holds billing and shipping information.

- Another maintains order history.

- Additional systems store customer support details, behavioural data, and third-party information.

Although this data collectively offers a comprehensive customer view, the independence of these datasets complicates answering specific questions, such as identifying the types of orders that result in the highest customer support costs.

Data engineering integrates these disparate datasets, allowing you to quickly and efficiently find answers to your questions.
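As a minimal sketch of the integration described above, the Python below joins support tickets to orders on a shared `order_id` and totals support cost by order type. The two in-memory datasets and all field names (`order_type`, `cost_usd`) are hypothetical and exist only for illustration. A real pipeline would read from the billing, order, and support systems instead.

```python
from collections import defaultdict

# Hypothetical records from two independent systems (illustrative only).
orders = [
    {"order_id": 1, "order_type": "subscription"},
    {"order_id": 2, "order_type": "one_time"},
    {"order_id": 3, "order_type": "subscription"},
]
support_tickets = [
    {"order_id": 1, "cost_usd": 12.0},
    {"order_id": 1, "cost_usd": 8.0},
    {"order_id": 2, "cost_usd": 5.0},
    {"order_id": 3, "cost_usd": 20.0},
]


def support_cost_by_order_type(orders, tickets):
    """Join tickets to orders on order_id and total the cost per order type."""
    type_by_order = {o["order_id"]: o["order_type"] for o in orders}
    totals = defaultdict(float)
    for ticket in tickets:
        order_type = type_by_order.get(ticket["order_id"])
        if order_type is None:
            continue  # ticket points at an order the order system does not know
        totals[order_type] += ticket["cost_usd"]
    return dict(totals)


costs = support_cost_by_order_type(orders, support_tickets)
most_expensive = max(costs, key=costs.get)
print(costs, most_expensive)
```

Once the datasets share a key, the business question becomes a single aggregation. Most of the engineering effort typically goes into making that shared key reliable across systems.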

Exciting Facts About Data Engineering: Verified Market Research estimates that the market for Big Data and Data Engineering Services was valued at USD 80.10 billion in 2023 and is projected to reach USD 163.80 billion by 2030, with a compound annual growth rate (CAGR) of 15.48% from 2024 to 2030.

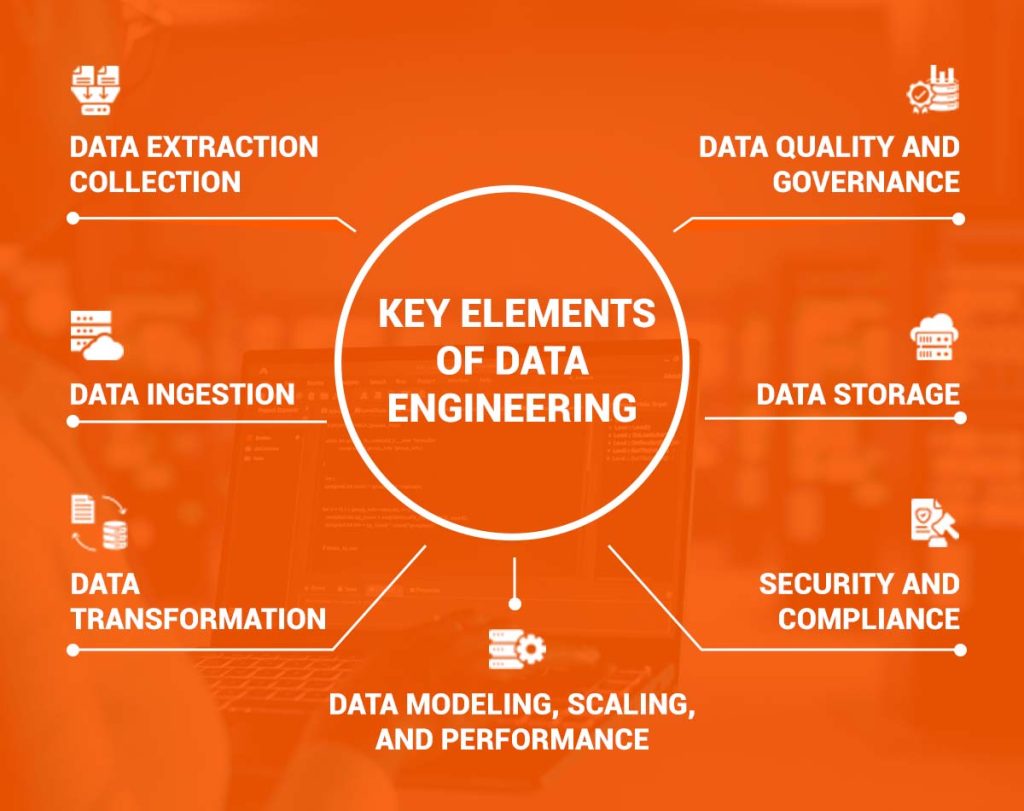

Key Elements of Data Engineering

Data engineering is broad in both definition and application. To better understand the discipline, consider the following key elements.

Data Extraction/Collection: This aspect involves creating systems and processes to extract data of various formats from multiple sources. It includes structured customer data in relational databases and data warehouses, semi-structured data like emails and website content stored on servers, and unstructured data such as videos, audio, and text files in a data lake. The diversity of data formats and sources is practically infinite.

Data Ingestion: This involves identifying data sources and performing data validation, indexing, cataloguing, and formatting. Given the enormous volumes of data, data engineering tools and processing systems are used to expedite the ingestion of these large datasets.

Data Storage: Data engineers design storage solutions for ingested data, ranging from cloud data warehouses to data lakes and NoSQL databases. Depending on the organisation’s structure and staffing, they may also manage data within these storage solutions.

Data Transformation: To make data useful for data scientists, business intelligence, and analytics, it must be cleaned, enriched, and integrated with other sources. Data engineers develop ETL (extract, transform, load) pipelines and integration workflows to prepare large datasets for analysis and modelling. Tools such as Apache Airflow, Hadoop, and Talend are chosen based on the data processing needs of end users such as data analysts and data scientists. The final step is loading the processed data into the systems where it is used to generate insights.
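The extract, transform, and load steps can be sketched in a few lines of standard-library Python. This is an illustrative toy, not how Airflow, Hadoop, or Talend work internally. The CSV content, the `payments` table, and the function names are all assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; one row has a malformed amount on purpose.
RAW_CSV = """customer_id,email,amount
1, Alice@Example.com ,10.50
2,bob@example.com,not_a_number
3,carol@example.com,7
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize emails and drop rows whose amount is not numeric."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows that fail the numeric check
        clean.append((int(row["customer_id"]), row["email"].strip().lower(), amount))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS payments "
        "(customer_id INTEGER, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO payments VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
total = conn.execute("SELECT SUM(amount) FROM payments").fetchone()[0]
print(total)
```

Keeping each stage a separate function makes it easier to test the stages in isolation and to swap the in-memory SQLite target for a real warehouse later.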

Data Modeling, Scaling, and Performance: Defining and creating data models is crucial in data engineering. Machine learning models are also used to help manage data volumes, handle query load, and improve database performance and infrastructure scaling.

Data Quality and Governance: Ensuring data accuracy and accessibility is essential. Data engineers establish validation rules and processes to maintain data integrity and comply with organizational governance policies.
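One way to picture validation rules is as named checks applied to every record. The sketch below assumes a hypothetical record shape (`customer_id`, `email`, `amount`) and three made-up rules. Real governance policies would define their own.

```python
def validate(record, rules):
    """Return the names of the rules that the record fails."""
    return [name for name, check in rules.items() if not check(record)]


# Hypothetical rules; each maps a rule name to a predicate over one record.
RULES = {
    "has_customer_id": lambda r: r.get("customer_id") is not None,
    "email_has_at": lambda r: "@" in (r.get("email") or ""),
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}

records = [
    {"customer_id": 1, "email": "a@example.com", "amount": 10.0},
    {"customer_id": None, "email": "b@example.com", "amount": 3.0},
    {"customer_id": 3, "email": "no-at-sign", "amount": -1},
]

# Map each record index to the list of rules it violates.
report = {i: validate(r, RULES) for i, r in enumerate(records)}
valid = [r for i, r in enumerate(records) if not report[i]]
print(report)
```

Returning the failed rule names, rather than a bare pass or fail, gives data owners something actionable when a record is rejected.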

Security and Compliance: Data engineers implement the security measures required by organizational cybersecurity protocols and industry-specific data privacy regulations (e.g., HIPAA) so that all systems remain compliant.

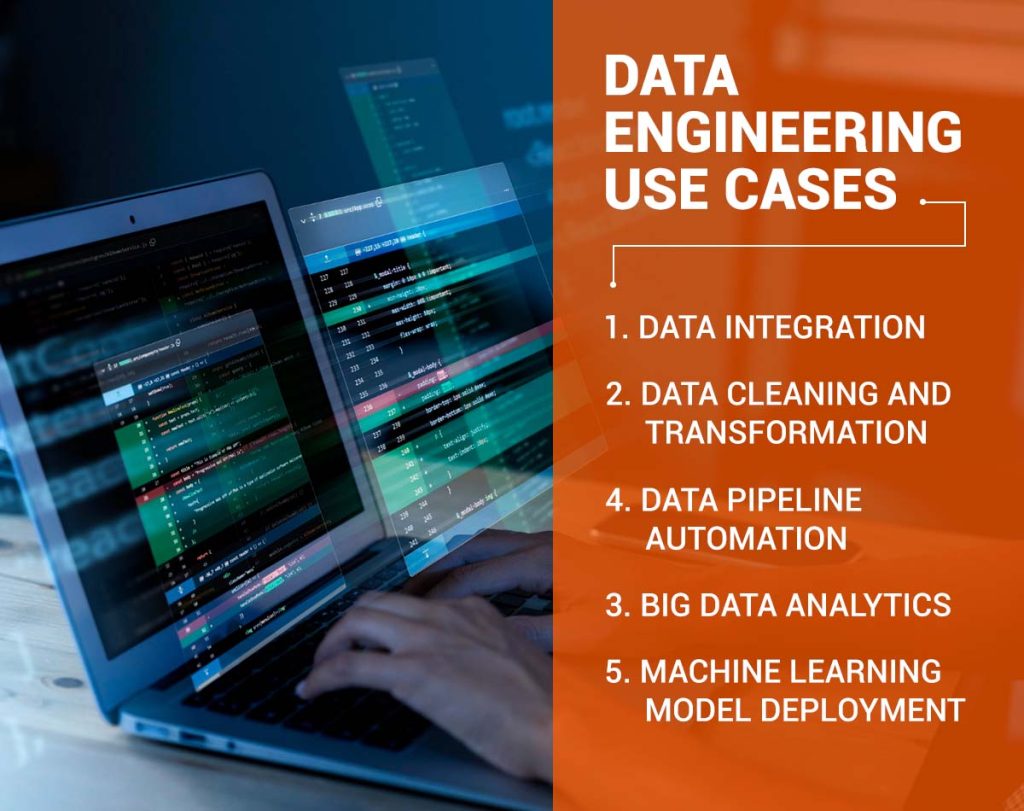

Data Engineering Use Cases

Data engineering is central to managing and using large volumes of data. Here are several use cases where it plays a key role:

1. Data Integration

Use Case: Integrating data from multiple sources into a cohesive and accessible format.

- Scenario: A retail company collects data from various sources, such as online sales, in-store transactions, and customer loyalty programs.

- Solution: Data engineers use ETL (Extract, Transform, Load) processes to integrate these disparate data sources into a centralized data warehouse.

- Outcome: This integration allows for a unified view of customer behaviour, enabling better marketing strategies and inventory management.

2. Data Cleaning and Transformation

Use Case: Ensuring data quality and consistency across the organization.

- Scenario: A healthcare provider collects patient data from multiple clinics using different formats and standards.

- Solution: Data engineers develop pipelines to clean, standardize, and transform this data into a consistent format.

- Outcome: Clean and standardized data ensures accurate patient records and improves the quality of care.
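As a rough illustration of the standardization step in this scenario, the sketch below converts records from two clinics into one format. The clinic names, the date formats, and the field names are assumptions invented for the example.

```python
from datetime import datetime

# Hypothetical per-clinic date formats (assumed for illustration).
DATE_FORMATS = {
    "clinic_a": "%Y-%m-%d",  # e.g. 1990-04-12
    "clinic_b": "%d/%m/%Y",  # e.g. 12/04/1990
}


def standardize(record, clinic):
    """Convert one clinic-specific record into a shared format."""
    dob = datetime.strptime(record["dob"], DATE_FORMATS[clinic]).date()
    return {
        # Collapse repeated whitespace and normalize capitalization.
        "patient_name": " ".join(record["name"].split()).title(),
        # ISO 8601 date string, regardless of the source format.
        "date_of_birth": dob.isoformat(),
        "source_clinic": clinic,
    }


a = standardize({"name": "jane  DOE", "dob": "1990-04-12"}, "clinic_a")
b = standardize({"name": " john smith ", "dob": "12/04/1990"}, "clinic_b")
print(a)
print(b)
```

Recording the source clinic alongside the cleaned values keeps the data traceable if a format assumption later turns out to be wrong.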

3. Big Data Analytics

Use Case: Analyzing large volumes of data to extract valuable insights.

- Scenario: An e-commerce platform wants to analyze customer browsing and purchase patterns to personalize recommendations.

- Solution: Data engineers build and maintain data lakes and use tools like Hadoop and Spark for big data analytics.

- Outcome: Personalized recommendations increase customer engagement and sales.

4. Data Pipeline Automation

Use Case: Automating data workflows to improve efficiency and reliability.

- Scenario: A logistics company needs to process and analyze shipping data from various carriers daily.

- Solution: Using orchestration tools like Apache Airflow, data engineers create automated data pipelines.

- Outcome: Automated data workflows reduce manual intervention, ensuring timely and accurate data processing.
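The core idea behind orchestration is running tasks in dependency order. The sketch below shows that idea with Python's standard `graphlib` module (Python 3.9+). It is not Apache Airflow's API, and the task names and sample shipping data are hypothetical.

```python
from graphlib import TopologicalSorter


def fetch(state):
    # Stand-in for pulling the day's shipping data from carriers.
    state["shipments"] = [{"carrier": "A", "days": 3}, {"carrier": "B", "days": 5}]


def clean(state):
    # Keep only shipments with a plausible transit time.
    state["clean"] = [s for s in state["shipments"] if s["days"] >= 0]


def report(state):
    days = [s["days"] for s in state["clean"]]
    state["avg_days"] = sum(days) / len(days)


TASKS = {"fetch": fetch, "clean": clean, "report": report}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDS_ON = {"clean": {"fetch"}, "report": {"clean"}}


def run_pipeline():
    """Run every task once, in an order that respects the dependencies."""
    state, order = {}, []
    for name in TopologicalSorter(DEPENDS_ON).static_order():
        TASKS[name](state)
        order.append(name)
    return state, order


state, order = run_pipeline()
print(order, state["avg_days"])
```

A production orchestrator adds scheduling, retries, and monitoring on top of this dependency ordering.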

5. Machine Learning Model Deployment

Use Case: Deploying machine learning models into production for predictive analytics.

- Scenario: A marketing agency wants to deploy a churn prediction model to identify customers likely to leave.

- Solution: Data engineers work with data scientists to deploy the model using MLOps practices, ensuring scalable and reliable model operations.

- Outcome: The deployed model helps the agency proactively address customer churn, improving retention rates.

What Drives the Rising Popularity of Data Engineering?

The popularity of data engineering is driven by businesses' growing reliance on data-driven insights and informed decision-making. As companies gather vast amounts of data, they need more skilled professionals to design, build, and manage efficient data pipelines and infrastructure. Advances in big data technologies, cloud computing, and machine learning also make efficient data processing and analytics more attainable.

Moreover, the emphasis on data quality and real-time processing adds to the demand for data engineering expertise, helping businesses harness their data effectively for competitive advantage and strategic growth.

Why Choose Nirvana Lab for Data Engineering

Nirvana Lab stands out in data engineering through our expertise in crafting customized data management solutions. We design and implement Data Lakes that store raw data for quick analytics and machine learning, and we offer Data Warehousing solutions for scalable and efficient data storage. Our team also excels in Data Virtualization, providing flexible access to data from various sources. We assess your needs, such as data security, quality, and performance, to recommend the best solutions. With our deep experience and innovative approach, Nirvana Lab ensures your data infrastructure is robust, adaptable, and ready for the future.

Frequently Asked Questions

What is Data Engineering?

Data Engineering is the discipline focused on designing, building, and maintaining systems for collecting, storing, and processing large volumes of data. It involves ensuring data quality, accessibility, and usability for analysis. Data engineers create and manage data pipelines, storage solutions, and transformation processes to support data science and business intelligence efforts.

Why is Data Engineering important for businesses?

Data engineering is essential because it lets businesses handle and use vast quantities of data efficiently. By integrating disparate data sources, cleaning and transforming data, and keeping data accessible, it makes accurate and timely analysis possible. This supports informed decision-making and helps businesses answer critical questions, optimize operations, and gain competitive advantages.

What makes Nirvana Lab a good choice for Data Engineering services?

Nirvana Lab is an excellent choice for Data Engineering due to its expertise in creating customized data management solutions. They offer robust Data Lakes, scalable Data Warehousing, and flexible Data Virtualization services. Their team assesses data needs, ensures security and quality, and provides innovative solutions to support business growth and future needs.

What are some key elements of Data Engineering?

Key elements of Data Engineering include collecting data from different sources, ensuring it is stored properly, and cleaning it to be useful for analysis. Data engineers also set up systems to manage this data, make it easy to access, and ensure it is accurate and secure.

What are some common use cases for Data Engineering?

Common uses for Data Engineering include combining data from various sources into one place, cleaning and organizing data to make it consistent, analyzing large amounts of data to find useful insights, and automating the processes involved in handling data. It also includes setting up systems to use machine learning models for predictions.